Suddenly, “open source” is the latest buzzword in AI circles. Meta has pledged to create open-source artificial general intelligence. And Elon Musk is suing OpenAI over its lack of open-source AI models.

Meanwhile, a growing number of tech leaders and companies are setting themselves up as open-source champions.

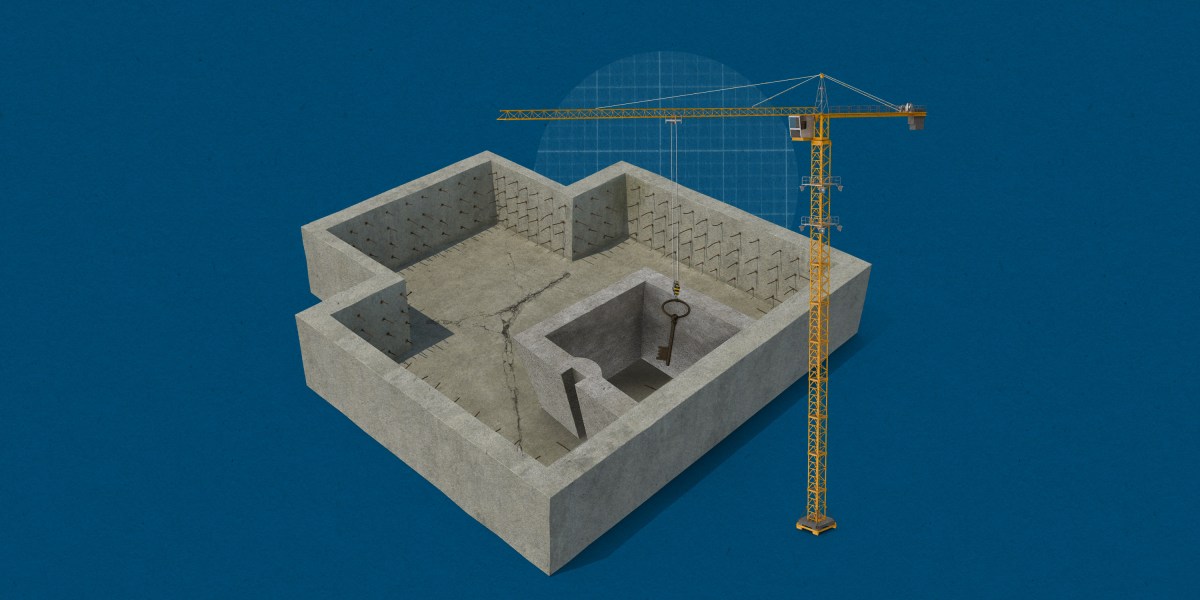

But there’s a fundamental problem—no one can agree on what “open-source AI” means. In theory, it promises a future where anyone can take part in the technology’s development. That could accelerate innovation, boost transparency, and give users greater control over systems that could soon reshape many aspects of our lives.

But what even is it? What makes an AI model open source, and what disqualifies it? Whatever the answers are, they could have significant ramifications for the future. Read the full story.

—Edd Gent

Apple researchers explore dropping “Siri” phrase & listening with AI instead

The news: Researchers from Apple are probing whether it’s possible to use artificial intelligence to detect when a user is speaking to a device like an iPhone, thereby eliminating the technical need for a trigger phrase like “Siri,” according to a new paper.

How they did it: Researchers trained a large language model using both speech captured by smartphones and acoustic data from background noise, looking for patterns that could indicate when users want help from the device. The results were promising: the model, which was built in part with a version of OpenAI’s GPT-2, made more accurate predictions than audio-only or text-only models, and it improved further as the models grew larger.

Why it matters: The paper is one of a number of recent signals that Apple, which is perceived to be lagging behind other tech giants like Amazon, Google, and Facebook in the artificial intelligence race, is planning to incorporate more AI into its products. Read the full story.
