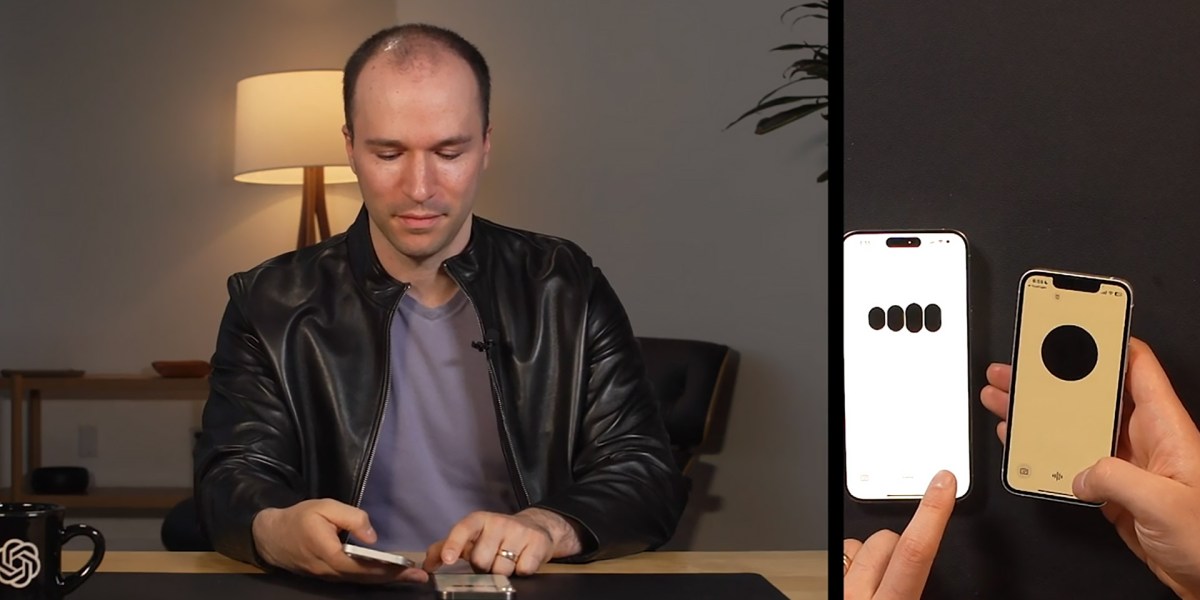

The news: OpenAI just debuted GPT-4o, a new kind of AI model that you can communicate with in real time via live voice conversation, video streams from your phone, and text. The model is rolling out over the next few weeks and will be free for all users through both the ChatGPT app and the web interface, according to the company.

How does it differ from GPT-4? GPT-4 also gives users multiple ways to interact with OpenAI’s AI offerings. But it siloed them in separate models, leading to longer response times and presumably higher computing costs. GPT-4o has now merged those capabilities into a single model to deliver faster responses and smoother transitions between tasks.

The big picture: The result, the company’s demonstration suggests, is a conversational assistant much in the vein of Siri or Alexa—but capable of fielding much more complex prompts. Read the full story.

—James O’Donnell

What to expect at Google I/O

Google is holding its I/O conference today, May 14, and we expect it to announce a whole new slew of AI features, further embedding AI into everything it does.

There has been a lot of speculation that it will upgrade its crown jewel, Search, with generative AI features that could, for example, go behind a paywall. Despite holding 90% of the online search market, Google is in a defensive position this year. It’s racing to catch up with its rivals Microsoft and OpenAI, while upstarts such as Perplexity AI have launched their own versions of AI-powered search to rave reviews.

While the company is tight-lipped about its announcements, we can make educated guesses. Read the full story.

—Melissa Heikkilä

This story is from The Algorithm, our weekly AI newsletter. Sign up to receive it in your inbox every Monday.
